前言

Datawhale组织了一次人工智能相关的线上夏令营活动

一共分为三个赛道:机器学习、自然语言处理、计算机视觉

由于我还是个菜菜,就选择了机器学习赛道来练习一下基础

赛事任务

初赛提供了电炉17个温区的实际生产数据,分别是电炉上部17组加热棒设定温度T1-1~T1-17,电炉下部17组加热棒设定温度T2-1~T2-17,底部17组进气口的设定进气流量V1-V17,选手需要根据提供的数据样本构建模型,预测电炉上下部空间17个测温点的测量温度值。

数据说明

1)进气流量

2)加热棒上部温度设定值

3)加热棒下部温度设定值

4)上部空间测量温度

5)下部空间测量温度

每项数据各17个

分析

赛题给出的是根据前三项数据预测后两项数据,但在给出的数据集中(可能)还有一项数据为时间信息(日期+时间),不确定是否会对结果产生实质性的作用

Baseline分析

baseline主要运用了sklearn和lightgbm机器学习模型进行数据处理和训练,相比于是之前使用的pytorch,更加适合机器学习数据挖掘的任务,也就是本赛题,pytorch多用于深度学习网络的构建和训练。

point

- 使用submit["序号"] = test_dataset["序号"]对最后提交的数据及进行序号对齐

- 使用sklearn中的train_test_split模块进行训练集和验证集的分割

- 定义了time_feature函数用于时间数据的处理,而我之前没有考虑到时间因素便直接给Drop掉了

上分技巧

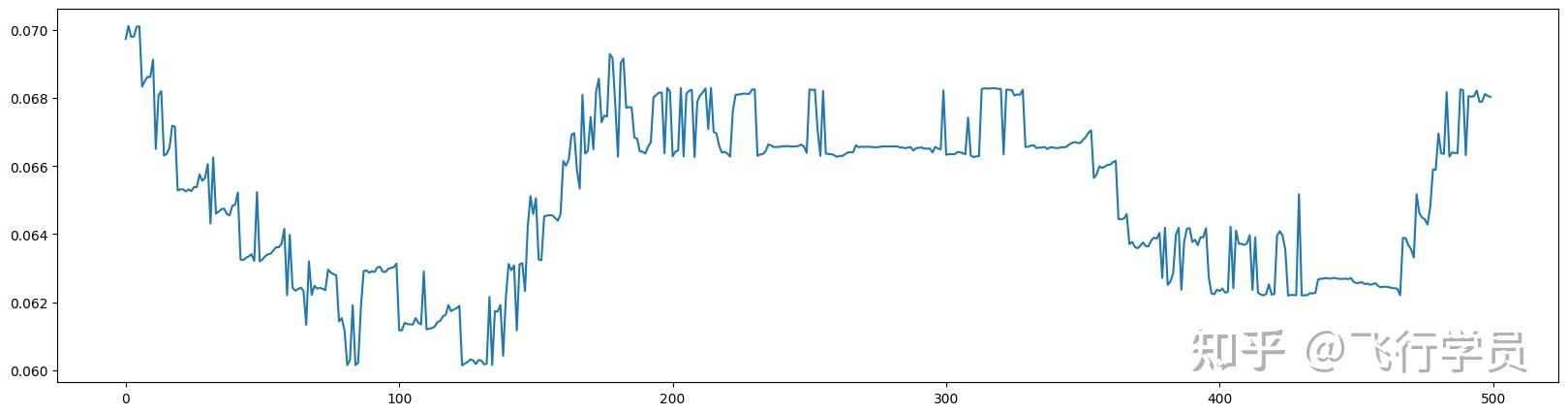

首先要选用合适的模型,其次要合理调整超参。我在调整超参时主要是通过观察训练集和验证集的loss来查看模型的拟合程度,进而对超参进行调整。

代码

在没看到Baseline之前,也尝试过使用多层网络来做,但是出现了过拟合现象,最好成绩才跑到了7.9分(预估MAE为4.9)

贴一下之前的代码:

#!/usr/bin/env python

# coding: utf-8

# In[25]:

import pandas as pd

import torch

import os

# In[26]:

pd.read_csv('train.csv')

# In[27]:

config = {

'lr': 6e-3,

'batch_size': 256,

'epoch': 180,

'device': 'cuda' if torch.cuda.is_available() else 'cpu',

'res': ['上部温度'+str(i+1) for i in range(17)] + ['下部温度'+str(i+1) for i in range(17)],

'ignore':['序号', '时间'],

'cate_cols': [],

'num_cols': ['上部温度设定'+str(i+1) for i in range(17)] + ['下部温度设定'+str(i+1) for i in range(17)] + ['流量'+str(i+1) for i in range(17)],

'dataset_path': './',

'weight_decay': 0.01

}

# In[28]:

raw_data = pd.read_csv(os.path.join(config['dataset_path'], 'train.csv'))

test_data = pd.read_csv(os.path.join(config['dataset_path'], 'test.csv'))

raw_data = pd.concat([raw_data, test_data])

raw_data

# In[28]:

# In[29]:

for i in raw_data.columns:

print(i,"--->\t",len(raw_data[i].unique()))

# In[30]:

def oneHotEncode(df, colNames):

for col in colNames:

dummies = pd.get_dummies(df[col], prefix=col)

df = pd.concat([df, dummies],axis=1)

df.drop([col], axis=1, inplace=True)

return df

# In[31]:

raw_data

# In[32]:

# 处理离散数据

for col in config['cate_cols']:

raw_data[col] = raw_data[col].fillna('-1')

raw_data = oneHotEncode(raw_data, config['cate_cols'])

# 处理连续数据

for col in config['num_cols']:

raw_data[col] = raw_data[col].fillna(0)

# raw_data[col] = (raw_data[col]-raw_data[col].min()) / (raw_data[col].max()-raw_data[col].min())

# In[33]:

for i in raw_data.columns:

print(i,"--->\t",len(raw_data[i].unique()))

# In[34]:

raw_data

# In[35]:

raw_data.drop(config['ignore'], axis=1, inplace=True)

# In[36]:

all_data = raw_data.astype('float32')

# In[37]:

all_data

# In[ ]:

# 暂存处理后的test数据集

test_data = all_data[pd.isna(all_data['下部温度1'])]

test_data.to_csv('./one_hot_test.csv')

# In[ ]:

# In[ ]:

train_data = all_data[pd.notna(all_data['下部温度1'])]

# In[ ]:

# 打乱

train_data = train_data.sample(frac=1)

train_data.shape

# In[ ]:

# 分离目标

train_target = train_data[config['res']]

train_data.drop(config['res'], axis=1, inplace=True)

train_data.shape

# In[ ]:

train_target.to_csv('./train_target.csv')

# In[ ]:

# 分离出验证集,用于观察拟合情况

validation_data = train_data[:800]

train_data = train_data[800:]

validation_target = train_target[:800]

train_target = train_target[800:]

validation_data.shape, train_data.shape, validation_target.shape, train_target.shape

# In[ ]:

from torch import nn

# 定义Residual Block

class ResidualBlock(nn.Module):

def __init__(self, in_features, out_features, dropout_rate=0.5):

super(ResidualBlock, self).__init__()

self.linear1 = nn.Linear(in_features, out_features)

self.dropout = nn.Dropout(dropout_rate)

self.linear2 = nn.Linear(out_features, out_features)

def forward(self, x):

identity = x

out = nn.relu(self.linear1(x))

out = self.dropout(out)

out = self.linear2(out)

out += identity

out = nn.relu(out)

return out

# In[ ]:

from torch import nn

# 定义网络结构

class Network(nn.Module):

def __init__(self, in_dim, hidden_1, hidden_2, hidden_3, weight_decay=0.01):

super().__init__()

self.layers = nn.Sequential(

nn.Linear(in_dim, hidden_1),

# # nn.Dropout(0.49),

nn.LeakyReLU(),

nn.BatchNorm1d(hidden_1),

# nn.Linear(hidden_1, hidden_2),

# nn.Dropout(0.2),

# nn.LeakyReLU(),

# nn.BatchNorm1d(hidden_2),

# nn.Linear(hidden_2, hidden_3),

# nn.Dropout(0.2),

# nn.LeakyReLU(),

# nn.BatchNorm1d(hidden_3),

nn.Linear(hidden_1, 34)

)

# 将权重衰减系数作为类的属性

self.weight_decay = weight_decay

def forward(self, x):

y = self.layers(x)

return y

# In[ ]:

train_data.shape[1]

# In[ ]:

# 定义网络

model = Network(train_data.shape[1], 256, 128, 64)

model.to(config['device'])

try:

model.load_state_dict(torch.load('model.pth', map_location=config['device']))

except Exception:

# 使用Xavier初始化权重

for line in model.layers:

if type(line) == nn.Linear:

print(line)

nn.init.kaiming_uniform_(line.weight)

# In[ ]:

train_data.columns

# In[ ]:

import torch

# 将数据转化为tensor,并移动到cpu或cuda上

train_features = torch.tensor(train_data.values, dtype=torch.float32, device=config['device'])

train_num = train_features.shape[0]

train_labels = torch.tensor(train_target.values, dtype=torch.float32, device=config['device'])

validation_features = torch.tensor(validation_data.values, dtype=torch.float32, device=config['device'])

validation_num = validation_features.shape[0]

validation_labels = torch.tensor(validation_target.values, dtype=torch.float32, device=config['device'])

# del train_data, train_target, validation_data, validation_target, raw_data, all_data, test_data

# 特征长度

train_features[1].shape

# In[ ]:

# train_features

# In[ ]:

# config['lr'] = 3e-3

# In[ ]:

from torch import optim

# 在定义优化器时,传入网络中的weight_decay参数

def create_optimizer(model, learning_rate, weight_decay):

return optim.Adam(model.parameters(), lr=learning_rate, weight_decay=weight_decay)

# 定义损失函数和优化器

# criterion = nn.MSELoss()

criterion = torch.nn.L1Loss()

criterion.to(config['device'])

optimizer = optim.Adam(model.parameters(), lr=config['lr'])

loss_fn = torch.nn.L1Loss()

# In[ ]:

def val(model, feature, label):

model.eval()

pred = model(feature)

pred = pred.squeeze()

res = loss_fn(pred, label).item()

return res

# In[ ]:

mse_list = []

# In[ ]:

# 在训练过程中添加L2正则化项

def train(model, train_features, train_labels, optimizer, criterion, weight_decay):

model.train()

for i in range(0, train_num, config['batch_size']):

end = i + config['batch_size']

if i + config['batch_size'] > train_num - 1:

end = train_num - 1

mini_batch = train_features[i: end]

mini_batch_label = train_labels[i: end]

pred = model(mini_batch)

pred = pred.squeeze()

loss = criterion(pred, mini_batch_label)

# 添加L2正则化项

# l2_reg = torch.tensor(0., device=config['device'])

# for param in model.parameters():

# l2_reg += torch.norm(param, p=2)

# loss += weight_decay * l2_reg

if torch.isnan(loss):

break

optimizer.zero_grad()

loss.backward()

optimizer.step()

# In[ ]:

# config['epoch'] = 4096

for epoch in range(config['epoch']):

print(f'Epoch[{epoch + 1}/{config["epoch"]}]')

model.eval()

train_mse = val(model, train_features[:config['batch_size']], train_labels[:config['batch_size']])

validation_mse = val(model, validation_features, validation_labels)

mse_list.append((train_mse, validation_mse))

print(f"epoch:{epoch + 1} Train_MSE: {train_mse} Validation_MSE: {validation_mse}")

model.train()

train(model, train_features, train_labels, optimizer, criterion, weight_decay=config['weight_decay'])

torch.save(model.state_dict(), 'model.pth')

# In[ ]:

import matplotlib.pyplot as plt

import numpy as np

y1, y2 = zip(*mse_list)

x = np.arange(0, len(y1))

plt.plot(x, y1, label='train')

plt.plot(x, y2, label='valid')

plt.xlabel("epoch")

plt.ylabel("Loss: MSE")

plt.legend()

plt.show()

评论 (0)