Preface

I joined the machine learning track of the second session of Datawhale's AI Summer Camp. Yes, it's machine learning again this time.

Baseline: https://aistudio.baidu.com/aistudio/projectdetail/6598302?sUid=2554132&shared=1&ts=1690895519028

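Competition task

In short, the task is to predict stock price movements with a model. More precisely, the target is the direction in which the mid-price moves N ticks ahead (see labelN below).

Details: https://challenge.xfyun.cn/topic/info?type=quantitative-model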

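Data description

- date: date
- time: timestamp (a time-of-day string)
- close: latest price / close price
- amount_delta: change in trading activity, i.e. the turnover (traded amount) from the previous tick to the current tick
- n_midprice: mid-price, normalized and expressed as a percentage change
- n_bidN: bid price at level N
- n_bsizeN: bid size at level N
- n_askN: ask price at level N
- n_asizeN: ask size at level N
- labelN: price movement direction over N ticks, i.e. the direction of the mid-price N ticks later relative to the current tick: 0 = down, 1 = unchanged, 2 = up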
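The sketch below makes the label definition concrete by deriving a labelN-style column from n_midprice. It is a hypothetical reconstruction, not the organizers' code. The helper name `make_direction_label` is made up, and the sketch assumes a plain sign comparison with no tolerance band, so a move of exactly zero counts as "unchanged". On the real data it would have to run per file (e.g. inside a `groupby('file')`) so the look-ahead never crosses file boundaries.

```python
import numpy as np
import pandas as pd


def make_direction_label(midprice: pd.Series, n: int) -> pd.Series:
    """Hypothetical labelN: direction of the mid-price n ticks ahead vs. the current tick.

    0 = down, 1 = unchanged, 2 = up; NaN where the tick n steps ahead does not exist.
    Assumes an exact comparison (no threshold), which may differ from the official rule.
    """
    future = midprice.shift(-n)   # mid-price n ticks later
    change = future - midprice
    label = pd.Series(np.where(change > 0, 2, np.where(change < 0, 0, 1)),
                      index=midprice.index, dtype='float64')
    label[future.isna()] = np.nan  # no future tick -> no label
    return label


mid = pd.Series([0.0, 0.1, 0.1, 0.05])
print(make_direction_label(mid, 1).tolist())  # [2.0, 1.0, 0.0, nan]
```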

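Evaluation metric

Submissions are evaluated with the macro-F1 score. A score is computed for each of label_5, label_10, label_20, label_40 and label_60, and the highest of the five is the final score.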
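Here is a minimal sketch of that scoring rule. It assumes the ground truth and predictions for each horizon are available as sequences of class ids (0/1/2). `competition_score` is a hypothetical helper, not the organizers' evaluation script; it uses scikit-learn's `f1_score` with `average='macro'`.

```python
from sklearn.metrics import f1_score

LABELS = ['label_5', 'label_10', 'label_20', 'label_40', 'label_60']


def competition_score(y_true: dict, y_pred: dict):
    """Macro-F1 for each horizon, then keep the best one as the final score."""
    per_label = {name: f1_score(y_true[name], y_pred[name], average='macro') for name in LABELS}
    return max(per_label.values()), per_label


truth = [0, 1, 2, 0, 1, 2]
y_true = {name: truth for name in LABELS}
y_pred = {name: [1, 2, 0, 1, 2, 0] for name in LABELS}  # every prediction wrong
y_pred['label_5'] = truth                                # one horizon predicted perfectly
best, per_label = competition_score(y_true, y_pred)
print(best)  # 1.0
```

Because only the best horizon counts, a model that does well on a single label is enough to get a good final score.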

An explanation of the F1 score: https://www.9998k.cn/archives/169.html

F1 score

F1 score = 2 * (precision * recall) / (precision + recall)
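F1 is the harmonic mean of precision and recall, so it stays low unless both are reasonably high. The worked example below shows this: with precision 0.5 and recall 1.0, F1 is 2 × 0.5 × 1.0 / 1.5 ≈ 0.667, lower than the arithmetic mean of 0.75. The function name is illustrative, and treating the 0/0 case as 0 is a common convention.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Worked example: precision 0.5, recall 1.0 -> 2 * 0.5 / 1.5
print(round(f1_from_pr(0.5, 1.0), 4))  # 0.6667
```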

precision and recall

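When I first read this I didn't really understand what precision and recall mean, so here is a short summary.

First, define the following terms, each relative to one class: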

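- TP (True Positive): a sample of this class predicted as this class
- TN (True Negative): a sample of another class predicted as another class
- FP (False Positive): a sample of another class wrongly predicted as this class
- FN (False Negative): a sample of this class wrongly predicted as another class

precision = TP / (TP + FP)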

recall = TP / (TP + FN)

accuracy = (TP + TN) / (TP + TN + FP + FN)
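These counts are defined per class. For the three-class labels here, each class is scored one-vs-rest, and macro-F1 is the unweighted mean of the three per-class F1 scores. The sketch below computes macro-F1 by hand from TP/FP/FN and checks it against scikit-learn's `f1_score(..., average='macro')`. The helper names and toy labels are made up for illustration.

```python
import numpy as np
from sklearn.metrics import f1_score


def per_class_counts(y_true, y_pred, cls):
    """TP, FP, FN, TN for one class, treating every other class as negative."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_pred == cls) & (y_true == cls)))
    fp = int(np.sum((y_pred == cls) & (y_true != cls)))
    fn = int(np.sum((y_pred != cls) & (y_true == cls)))
    tn = int(np.sum((y_pred != cls) & (y_true != cls)))
    return tp, fp, fn, tn


def macro_f1(y_true, y_pred, classes=(0, 1, 2)):
    """Unweighted mean of the per-class F1 scores."""
    f1s = []
    for cls in classes:
        tp, fp, fn, _ = per_class_counts(y_true, y_pred, cls)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1s.append(f1)
    return float(np.mean(f1s))


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
# per-class F1: class 0 -> 0.5, class 1 -> 0.8, class 2 -> 0.667
print(macro_f1(y_true, y_pred))                     # both about 0.6556
print(f1_score(y_true, y_pred, average='macro'))
```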

Analysis

Baseline analysis

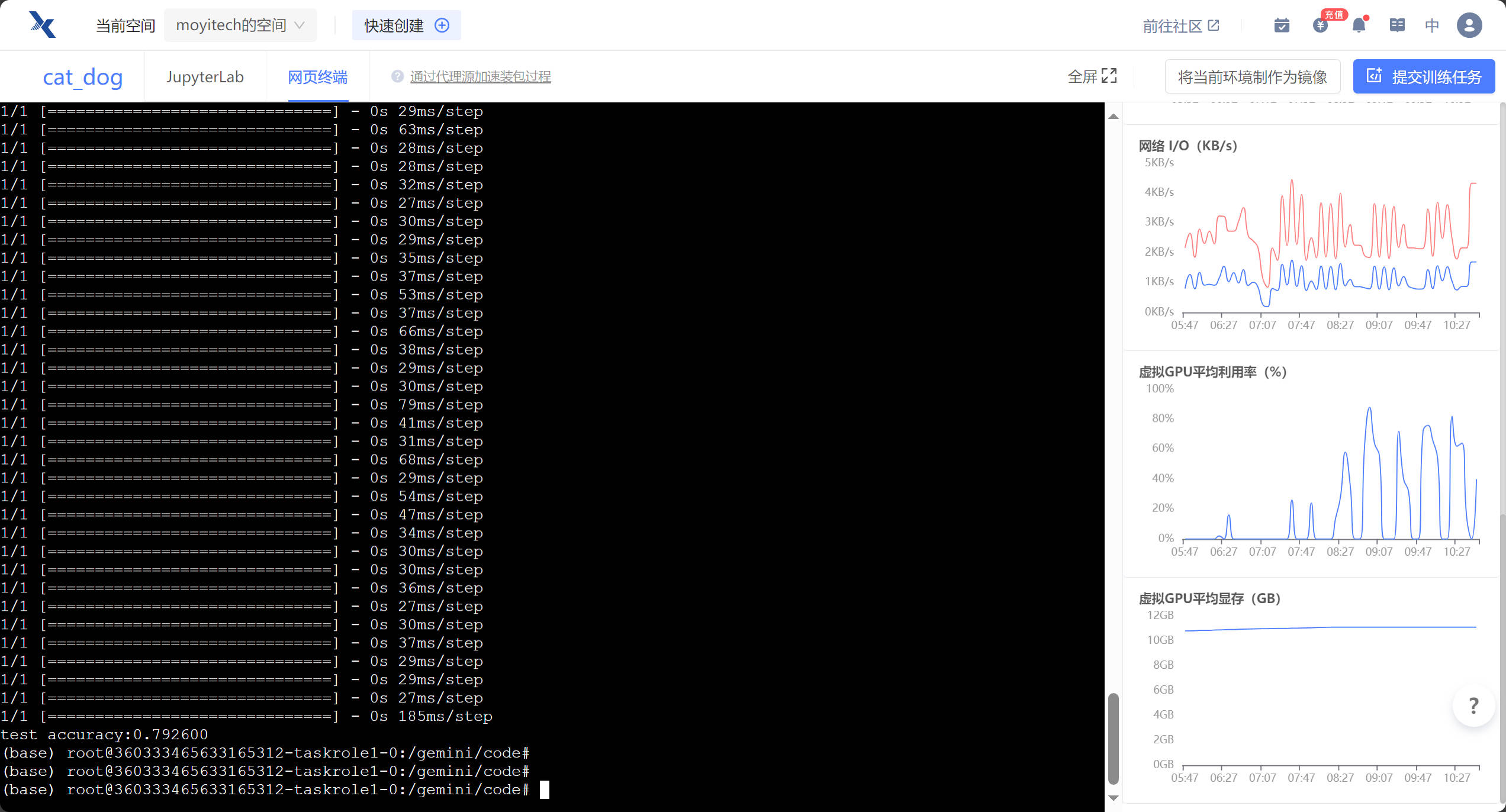

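This baseline uses CatBoost. Compared with LightGBM, which was used last time, CatBoost makes it easy to train on the GPU: [Datawhale Summer Camp Session 2] How to use the GPU with CatBoost

In this baseline, all you need to do is add one key to the params dict on line 15 of the cv_model function: 'task_type': 'GPU'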
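Hard-coding 'GPU' fails on machines without a GPU. If the same notebook has to run in both places, one option is to choose `task_type` at runtime. This sketch is not part of the baseline. The helper names `gpu_count` and `with_task_type` are hypothetical, and it assumes `catboost.utils.get_gpu_device_count()` is available in your CatBoost version.

```python
from typing import Optional


def gpu_count() -> int:
    """Number of GPUs CatBoost reports; 0 if CatBoost is not installed."""
    try:
        from catboost.utils import get_gpu_device_count
    except ImportError:
        return 0
    return get_gpu_device_count()


def with_task_type(params: dict, n_gpus: Optional[int] = None) -> dict:
    """Return a copy of params with task_type 'GPU' when a GPU is available, else 'CPU'."""
    if n_gpus is None:
        n_gpus = gpu_count()
    out = dict(params)  # do not mutate the caller's dict
    out['task_type'] = 'GPU' if n_gpus > 0 else 'CPU'
    return out


base = {'learning_rate': 0.12, 'depth': 9, 'loss_function': 'MultiClass'}
print(with_task_type(base, n_gpus=0)['task_type'])  # CPU
```

In cv_model, `params = with_task_type(params)` would then replace the hard-coded key.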

Code walkthrough

```python
import os

import pandas as pd
import tqdm

path = 'AI量化模型预测挑战赛公开数据/'  # data directory

train_files = os.listdir(path + 'train')  # list the files in the directory
train_df = pd.DataFrame()  # start from an empty DataFrame
for filename in tqdm.tqdm(train_files):  # iterate over the files with a tqdm progress bar
    if os.path.isdir(path + 'train/' + filename):  # skip cache folders to avoid errors
        continue
    tmp = pd.read_csv(path + 'train/' + filename)  # read one CSV into a temporary DataFrame
    tmp['file'] = filename  # record the source file name
    train_df = pd.concat([train_df, tmp], axis=0, ignore_index=True)  # append tmp to the accumulated DataFrame

test_files = os.listdir(path + 'test')
test_df = pd.DataFrame()
for filename in tqdm.tqdm(test_files):
    if os.path.isdir(path + 'test/' + filename):  # check the test directory, not train
        continue
    tmp = pd.read_csv(path + 'test/' + filename)
    tmp['file'] = filename
    test_df = pd.concat([test_df, tmp], axis=0, ignore_index=True)
```

Feature engineering 1.0

WAP (Weighted Average Price)

For each level N: weighted average price = (bid price × bid size + ask price × ask size) / (bid size + ask size)

```python
# compute WAP for levels 1-3
train_df['wap1'] = (train_df['n_bid1']*train_df['n_bsize1'] + train_df['n_ask1']*train_df['n_asize1'])/(train_df['n_bsize1'] + train_df['n_asize1'])
test_df['wap1'] = (test_df['n_bid1']*test_df['n_bsize1'] + test_df['n_ask1']*test_df['n_asize1'])/(test_df['n_bsize1'] + test_df['n_asize1'])

train_df['wap2'] = (train_df['n_bid2']*train_df['n_bsize2'] + train_df['n_ask2']*train_df['n_asize2'])/(train_df['n_bsize2'] + train_df['n_asize2'])
test_df['wap2'] = (test_df['n_bid2']*test_df['n_bsize2'] + test_df['n_ask2']*test_df['n_asize2'])/(test_df['n_bsize2'] + test_df['n_asize2'])

train_df['wap3'] = (train_df['n_bid3']*train_df['n_bsize3'] + train_df['n_ask3']*train_df['n_asize3'])/(train_df['n_bsize3'] + train_df['n_asize3'])
test_df['wap3'] = (test_df['n_bid3']*test_df['n_bsize3'] + test_df['n_ask3']*test_df['n_asize3'])/(test_df['n_bsize3'] + test_df['n_asize3'])
```
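The six WAP lines differ only in the level number and the DataFrame. Below is a loop-based sketch using the same formula as the baseline; the helper name `add_wap_features` is hypothetical. As in the original, a row where bid size + ask size is 0 yields NaN or inf.

```python
import pandas as pd


def add_wap_features(df: pd.DataFrame, levels=(1, 2, 3)) -> pd.DataFrame:
    """Add wap{N} = (bidN * bsizeN + askN * asizeN) / (bsizeN + asizeN) for each level N."""
    for n in levels:
        bid, ask = df[f'n_bid{n}'], df[f'n_ask{n}']
        bsize, asize = df[f'n_bsize{n}'], df[f'n_asize{n}']
        df[f'wap{n}'] = (bid * bsize + ask * asize) / (bsize + asize)
    return df


# Worked example: (10 * 3 + 11 * 1) / (3 + 1) = 41 / 4 = 10.25
demo = pd.DataFrame({'n_bid1': [10.0], 'n_bsize1': [3.0], 'n_ask1': [11.0], 'n_asize1': [1.0]})
print(add_wap_features(demo, levels=(1,))['wap1'].iloc[0])  # 10.25

# usage, assuming train_df/test_df were loaded as above:
# for df in (train_df, test_df):
#     add_wap_features(df)
```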

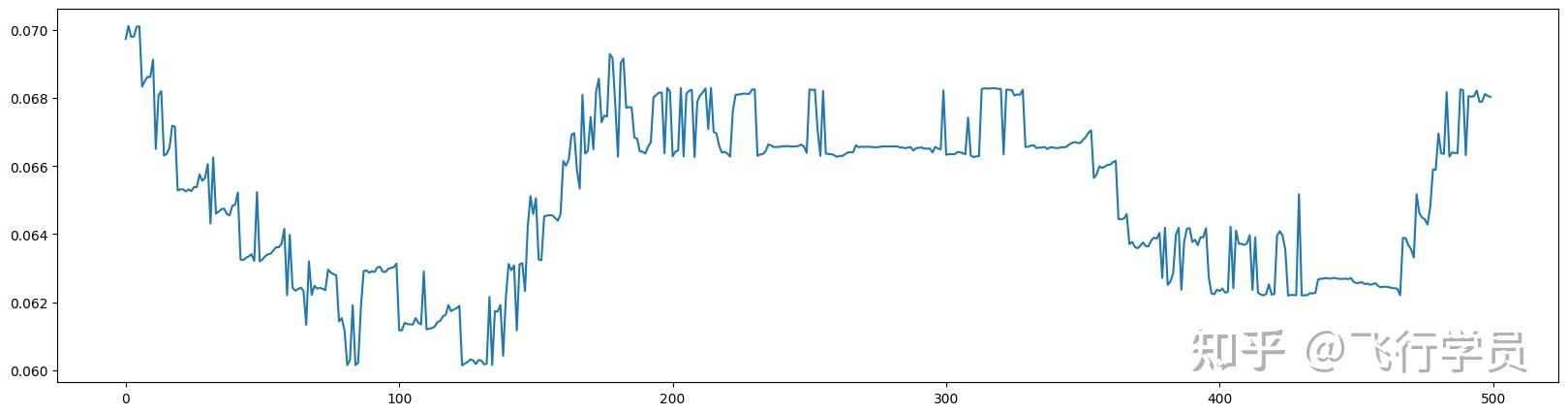

wap1

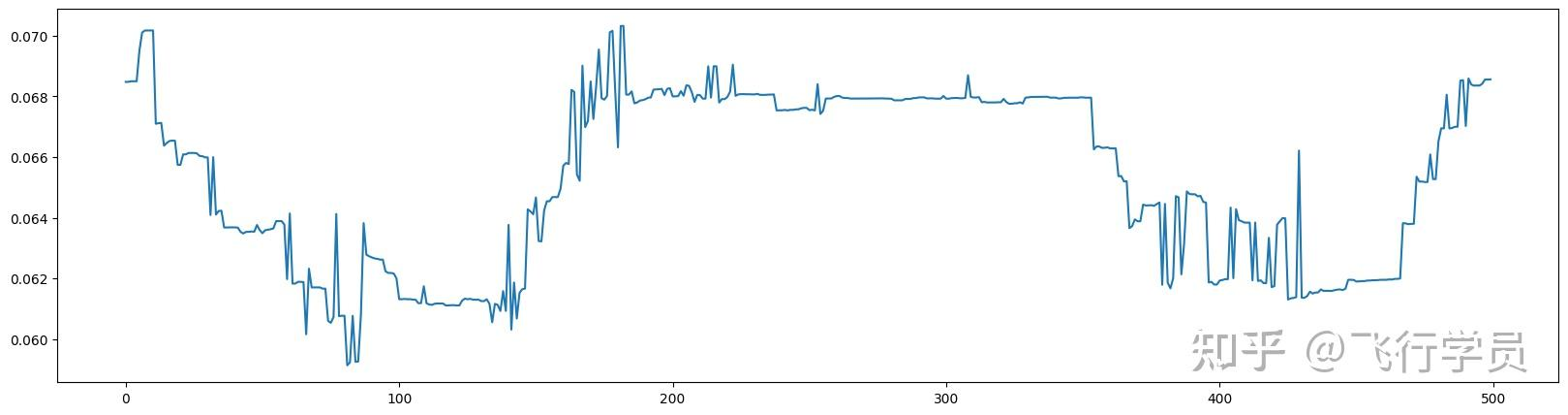

wap2

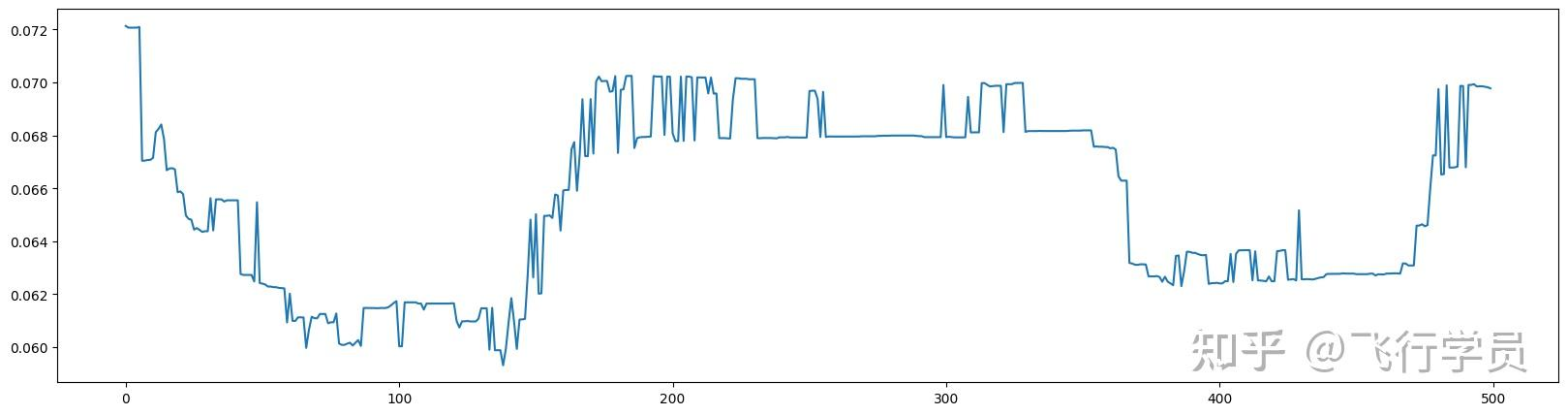

wap3

Feature engineering 2.0

```python
# sort by file and time so that rows within each file are in chronological order
train_df = train_df.sort_values(['file', 'time'])
test_df = test_df.sort_values(['file', 'time'])

# features at the current tick
# built around bid/ask prices and bid/ask sizes
# for now only level-1 and level-2 features are built; the remaining levels can be added when optimizing
train_df['wap1'] = (train_df['n_bid1']*train_df['n_bsize1'] + train_df['n_ask1']*train_df['n_asize1'])/(train_df['n_bsize1'] + train_df['n_asize1'])
test_df['wap1'] = (test_df['n_bid1']*test_df['n_bsize1'] + test_df['n_ask1']*test_df['n_asize1'])/(test_df['n_bsize1'] + test_df['n_asize1'])

train_df['wap2'] = (train_df['n_bid2']*train_df['n_bsize2'] + train_df['n_ask2']*train_df['n_asize2'])/(train_df['n_bsize2'] + train_df['n_asize2'])
test_df['wap2'] = (test_df['n_bid2']*test_df['n_bsize2'] + test_df['n_ask2']*test_df['n_asize2'])/(test_df['n_bsize2'] + test_df['n_asize2'])

train_df['wap_balance'] = abs(train_df['wap1'] - train_df['wap2'])
train_df['price_spread'] = (train_df['n_ask1'] - train_df['n_bid1']) / ((train_df['n_ask1'] + train_df['n_bid1'])/2)
train_df['bid_spread'] = train_df['n_bid1'] - train_df['n_bid2']
train_df['ask_spread'] = train_df['n_ask1'] - train_df['n_ask2']
train_df['total_volume'] = (train_df['n_asize1'] + train_df['n_asize2']) + (train_df['n_bsize1'] + train_df['n_bsize2'])
train_df['volume_imbalance'] = abs((train_df['n_asize1'] + train_df['n_asize2']) - (train_df['n_bsize1'] + train_df['n_bsize2']))

test_df['wap_balance'] = abs(test_df['wap1'] - test_df['wap2'])
test_df['price_spread'] = (test_df['n_ask1'] - test_df['n_bid1']) / ((test_df['n_ask1'] + test_df['n_bid1'])/2)
test_df['bid_spread'] = test_df['n_bid1'] - test_df['n_bid2']
test_df['ask_spread'] = test_df['n_ask1'] - test_df['n_ask2']
test_df['total_volume'] = (test_df['n_asize1'] + test_df['n_asize2']) + (test_df['n_bsize1'] + test_df['n_bsize2'])
test_df['volume_imbalance'] = abs((test_df['n_asize1'] + test_df['n_asize2']) - (test_df['n_bsize1'] + test_df['n_bsize2']))
```
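The train/test pairs above write every formula twice, which makes it easy for the two copies to drift apart. The sketch below defines the level-1/level-2 current-tick features once and applies them to any DataFrame. The helper name is hypothetical; the formulas are copied from the baseline.

```python
import pandas as pd


def add_current_tick_features(df: pd.DataFrame) -> pd.DataFrame:
    """Baseline current-tick features (levels 1 and 2), written once."""
    for n in (1, 2):
        df[f'wap{n}'] = ((df[f'n_bid{n}'] * df[f'n_bsize{n}'] + df[f'n_ask{n}'] * df[f'n_asize{n}'])
                         / (df[f'n_bsize{n}'] + df[f'n_asize{n}']))
    df['wap_balance'] = (df['wap1'] - df['wap2']).abs()
    df['price_spread'] = (df['n_ask1'] - df['n_bid1']) / ((df['n_ask1'] + df['n_bid1']) / 2)
    df['bid_spread'] = df['n_bid1'] - df['n_bid2']
    df['ask_spread'] = df['n_ask1'] - df['n_ask2']
    ask_volume = df['n_asize1'] + df['n_asize2']
    bid_volume = df['n_bsize1'] + df['n_bsize2']
    df['total_volume'] = ask_volume + bid_volume
    df['volume_imbalance'] = (ask_volume - bid_volume).abs()
    return df


row = pd.DataFrame({'n_bid1': [10.0], 'n_bsize1': [3.0], 'n_ask1': [11.0], 'n_asize1': [1.0],
                    'n_bid2': [9.0], 'n_bsize2': [2.0], 'n_ask2': [12.0], 'n_asize2': [4.0]})
print(add_current_tick_features(row).iloc[0])

# usage, assuming train_df/test_df exist:
# for df in (train_df, test_df):
#     add_current_tick_features(df)
```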

```python
# lagged (shifted) values: bring in historical information
for val in ['wap1', 'wap2', 'wap_balance', 'price_spread', 'bid_spread', 'ask_spread', 'total_volume', 'volume_imbalance']:
    for loc in [1, 5, 10, 20, 40, 60]:
        train_df[f'file_{val}_shift{loc}'] = train_df.groupby(['file'])[val].shift(loc)
        test_df[f'file_{val}_shift{loc}'] = test_df.groupby(['file'])[val].shift(loc)
```
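Grouping by `file` before shifting keeps values from leaking from the end of one file into the start of the next: the first `loc` rows of every file get NaN instead. `shift` follows row order, which is why the baseline sorts by `['file', 'time']` first. The toy data below is made up for illustration.

```python
import pandas as pd

demo = pd.DataFrame({
    'file': ['a.csv', 'a.csv', 'a.csv', 'b.csv', 'b.csv'],
    'wap1': [1.0, 2.0, 3.0, 10.0, 20.0],
})
demo['wap1_shift1'] = demo.groupby('file')['wap1'].shift(1)   # per-file lag
demo['wap1_shift1_nogroup'] = demo['wap1'].shift(1)           # lag without grouping
print(demo['wap1_shift1'].tolist())          # [nan, 1.0, 2.0, nan, 10.0]
print(demo['wap1_shift1_nogroup'].tolist())  # [nan, 1.0, 2.0, 3.0, 10.0]: row 3 sees a.csv's last value
```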

```python
# difference features: change relative to historical values
for val in ['wap1', 'wap2', 'wap_balance', 'price_spread', 'bid_spread', 'ask_spread', 'total_volume', 'volume_imbalance']:
    for loc in [1, 5, 10, 20, 40, 60]:
        train_df[f'file_{val}_diff{loc}'] = train_df.groupby(['file'])[val].diff(loc)
        test_df[f'file_{val}_diff{loc}'] = test_df.groupby(['file'])[val].diff(loc)
```
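A grouped `diff(k)` is the current value minus the per-file value k rows earlier, i.e. `x - groupby(...).shift(k)`. The diff features therefore measure absolute change against the lagged features built just before them. A small check on made-up data:

```python
import pandas as pd

demo = pd.DataFrame({
    'file': ['a.csv', 'a.csv', 'a.csv', 'b.csv', 'b.csv'],
    'wap1': [1.0, 2.0, 4.0, 10.0, 25.0],
})
grouped = demo.groupby('file')['wap1']
diff1 = grouped.diff(1)                      # per-file first difference
manual = demo['wap1'] - grouped.shift(1)     # same thing written with shift
print(diff1.tolist())  # [nan, 1.0, 2.0, nan, 15.0]
```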

```python
# rolling-window statistics: how the recent distribution of each feature changes
# more window sizes and statistics (e.g. min, max, median) are worth trying
for val in ['wap1', 'wap2', 'wap_balance', 'price_spread', 'bid_spread', 'ask_spread', 'total_volume', 'volume_imbalance']:
    train_df[f'file_{val}_win7_mean'] = train_df.groupby(['file'])[val].transform(lambda x: x.rolling(window=7, min_periods=3).mean())
    train_df[f'file_{val}_win7_std'] = train_df.groupby(['file'])[val].transform(lambda x: x.rolling(window=7, min_periods=3).std())
    test_df[f'file_{val}_win7_mean'] = test_df.groupby(['file'])[val].transform(lambda x: x.rolling(window=7, min_periods=3).mean())
    test_df[f'file_{val}_win7_std'] = test_df.groupby(['file'])[val].transform(lambda x: x.rolling(window=7, min_periods=3).std())
```
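`groupby(...).rolling(...)` is an alternative to `transform(lambda ...)` that avoids calling a Python lambda once per group, which usually helps on large frames. It returns a (file, original index) MultiIndex, so the `file` level has to be dropped before assigning back. The sketch uses window=3 and min_periods=2 instead of the baseline's 7/3 only so the toy data stays small: with min_periods=2, the first row of each file is NaN.

```python
import pandas as pd

demo = pd.DataFrame({
    'file': ['a.csv', 'a.csv', 'a.csv', 'b.csv', 'b.csv'],
    'wap1': [1.0, 2.0, 3.0, 10.0, 20.0],
})

# baseline style: a Python lambda per group
via_transform = demo.groupby('file')['wap1'].transform(
    lambda x: x.rolling(window=3, min_periods=2).mean())

# groupby().rolling(): drop the 'file' index level so the result realigns with demo's rows
via_rolling = (demo.groupby('file')['wap1']
               .rolling(window=3, min_periods=2).mean()
               .reset_index(level=0, drop=True)
               .sort_index())

print(via_rolling.tolist())  # [nan, 1.5, 2.0, nan, 15.0]
```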

```python
# time-of-day features
train_df['hour'] = train_df['time'].apply(lambda x: int(x.split(':')[0]))
test_df['hour'] = test_df['time'].apply(lambda x: int(x.split(':')[0]))

train_df['minute'] = train_df['time'].apply(lambda x: int(x.split(':')[1]))
test_df['minute'] = test_df['time'].apply(lambda x: int(x.split(':')[1]))
```
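The same hour/minute extraction can be vectorized with `Series.str.split(..., expand=True)`, which avoids a Python call per row. This is a sketch assuming `time` values are strings like 'HH:MM:SS'; the sample times are made up and `add_time_features` is a hypothetical helper.

```python
import pandas as pd


def add_time_features(df: pd.DataFrame) -> pd.DataFrame:
    """Vectorized hour/minute extraction; assumes 'time' strings like 'HH:MM:SS'."""
    parts = df['time'].str.split(':', expand=True)  # one column per ':'-separated field
    df['hour'] = parts[0].astype(int)
    df['minute'] = parts[1].astype(int)
    return df


demo = pd.DataFrame({'time': ['09:31:03', '14:59:57']})
add_time_features(demo)
print(demo[['hour', 'minute']].values.tolist())  # [[9, 31], [14, 59]]
```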

```python
# features fed into the model
cols = [f for f in test_df.columns if f not in ['uuid', 'time', 'file']]
```

Model training

```python
import numpy as np
from catboost import CatBoostClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import KFold


def cv_model(clf, train_x, train_y, test_x, clf_name, seed=2023):
    folds = 5
    kf = KFold(n_splits=folds, shuffle=True, random_state=seed)
    oof = np.zeros([train_x.shape[0], 3])  # out-of-fold class probabilities (3 classes)
    test_predict = np.zeros([test_x.shape[0], 3])  # test probabilities averaged over the folds
    cv_scores = []

    for i, (train_index, valid_index) in enumerate(kf.split(train_x, train_y)):
        print('************************************ {} ************************************'.format(str(i+1)))
        # KFold yields row positions, so select both X and y by position with .iloc
        trn_x, trn_y, val_x, val_y = train_x.iloc[train_index], train_y.iloc[train_index], train_x.iloc[valid_index], train_y.iloc[valid_index]

        if clf_name == "cat":
            params = {'learning_rate': 0.12, 'depth': 9, 'bootstrap_type': 'Bernoulli', 'random_seed': 11,
                      'od_type': 'Iter', 'od_wait': 100, 'allow_writing_files': False,
                      'loss_function': 'MultiClass', 'task_type': 'GPU'}  # 'task_type': 'GPU' enables GPU-accelerated training

            model = clf(iterations=2000, **params)
            model.fit(trn_x, trn_y, eval_set=(val_x, val_y),
                      metric_period=200,
                      use_best_model=True,
                      cat_features=[],
                      verbose=1)

            val_pred = model.predict_proba(val_x)
            test_pred = model.predict_proba(test_x)

        oof[valid_index] = val_pred
        test_predict += test_pred / kf.n_splits

        F1_score = f1_score(val_y, np.argmax(val_pred, axis=1), average='macro')
        cv_scores.append(F1_score)
        print(cv_scores)

    return oof, test_predict
```
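In the split step, labels should be selected with `train_y.iloc[train_index]` rather than `train_y[train_index]`. `KFold.split` returns row positions. On a pandas Series with an integer index, `[]` looks up index labels instead of positions. After `sort_values`, train_df's index labels no longer match row positions, so label lookup would pair each feature row with another row's label. A toy demonstration with made-up data:

```python
import numpy as np
import pandas as pd

# After sort_values the index labels are no longer 0..n-1 in row order.
df = pd.DataFrame({'x': [30, 10, 20], 'y': [3, 1, 2]}).sort_values('x')
positions = np.array([0, 1])  # KFold.split yields row positions like these

x_part = df['x'].iloc[positions].tolist()   # [10, 20]
y_iloc = df['y'].iloc[positions].tolist()   # [1, 2]: matches x_part row by row
y_label = df['y'][positions].tolist()       # [3, 1]: label lookup, rows do not match x_part
print(x_part, y_iloc, y_label)
```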

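```python
for label in ['label_5', 'label_10', 'label_20', 'label_40', 'label_60']:
    print(f'=================== {label} ===================')
    cat_oof, cat_test = cv_model(CatBoostClassifier, train_df[cols], train_df[label], test_df[cols], 'cat')
    # keep the predicted class (argmax of the probabilities);
    # note that this overwrites the ground-truth train_df[label] with the OOF predictions
    train_df[label] = np.argmax(cat_oof, axis=1)
    test_df[label] = np.argmax(cat_test, axis=1)
```

Questions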

Why wasn't an RNN or LSTM used for prediction?
