前言

又又又参加了Datawhale的AI夏令营第二期的机器学习赛道~,没错这次还是机器学习(外加运营助教)

baseline:https://aistudio.baidu.com/aistudio/projectdetail/6618108

赛事任务

基于提供的样本构建模型,预测用户的新增情况

数据说明

- udmap: 以dict形式给出,需要自定义函数解析

- common_ts: 事件发生时间,可使用df.dt进行解析

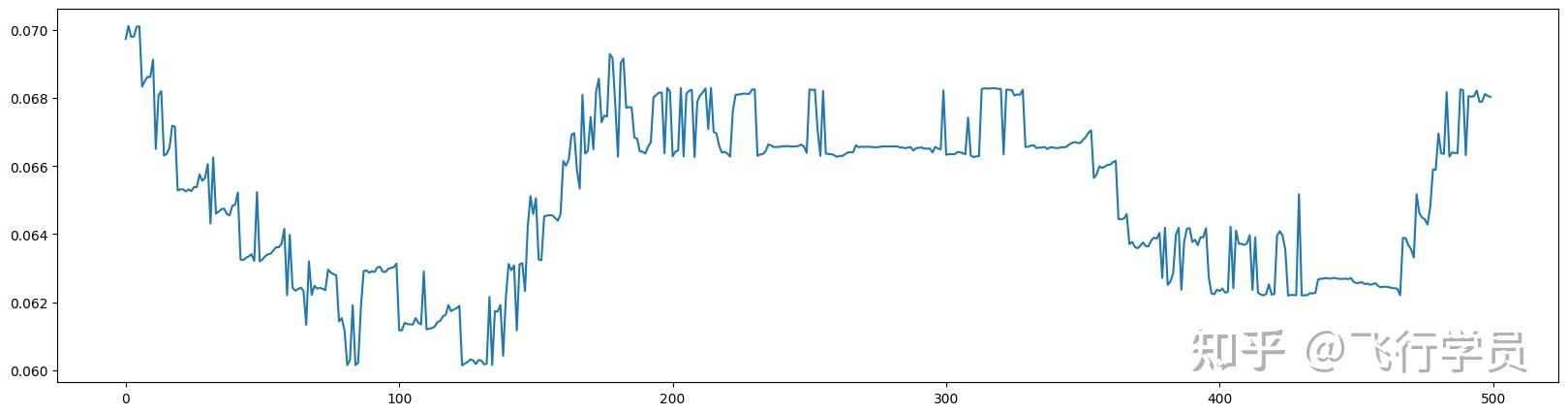

- x1-x8: 某种特征,但不清楚含义,可通过后续画图分析进行处理

- target: 预测目标0或1二分类

评估指标

还是f1,F1 score解释: https://www.9998k.cn/archives/169.html

F1 score

F1 score = 2 * (precision * recall) / (precision + recall)

precision and recall

第一次看的时候还不太懂precision和recall的含义,也总结一下

首先定义以下几个概念:

TP(True Positive):真阳性

TN (True Negative) : 真阴性

FP(False Positive):假阳性

FN(False Negative):假阴性precision = TP / (TP + FP)

recall = TP / (TP + FN)

accuracy = (TP + TN) / (TP + TN + FP + FN)

分析

baseline分析

跑出来是0.62+

- 使用了决策树模型进行训练

- 对udmap特征进行了提取

- 计算了eid的freq和mean

- 通过df.dt提取了hour信息

特征提取

除去baseline的特征外,又借鉴了锂电池那次的baseline,提取了如下特征:

- dayofweek

- weekofyear

- dayofyear

- is_weekend

调整后的分数为:0.75

train_data['common_ts_hour'] = train_data['common_ts'].dt.hour

test_data['common_ts_hour'] = test_data['common_ts'].dt.hour

train_data['common_ts_minute'] = train_data['common_ts'].dt.minute + train_data['common_ts_hour'] * 60

test_data['common_ts_minute'] = test_data['common_ts'].dt.minute + test_data['common_ts_hour'] * 60

train_data['dayofweek'] = train_data['common_ts'].dt.dayofweek

test_data['dayofweek'] = test_data['common_ts'].dt.dayofweek

train_data["weekofyear"] = train_data["common_ts"].dt.isocalendar().week.astype(int)

test_data["weekofyear"] = test_data["common_ts"].dt.isocalendar().week.astype(int)

train_data["dayofyear"] = train_data["common_ts"].dt.dayofyear

test_data["dayofyear"] = test_data["common_ts"].dt.dayofyear

train_data["day"] = train_data["common_ts"].dt.day

test_data["day"] = test_data["common_ts"].dt.day

train_data['is_weekend'] = train_data['dayofweek'] // 6

test_data['is_weekend'] = test_data['dayofweek'] // 6x1-x8特征提取

train_data['x1_freq'] = train_data['x1'].map(train_data['x1'].value_counts())

test_data['x1_freq'] = test_data['x1'].map(train_data['x1'].value_counts())

test_data['x1_freq'].fillna(test_data['x1_freq'].mode()[0], inplace=True)

train_data['x1_mean'] = train_data['x1'].map(train_data.groupby('x1')['target'].mean())

test_data['x1_mean'] = test_data['x1'].map(train_data.groupby('x1')['target'].mean())

test_data['x1_mean'].fillna(test_data['x1_mean'].mode()[0], inplace=True)

train_data['x2_freq'] = train_data['x2'].map(train_data['x2'].value_counts())

test_data['x2_freq'] = test_data['x2'].map(train_data['x2'].value_counts())

test_data['x2_freq'].fillna(test_data['x2_freq'].mode()[0], inplace=True)

train_data['x2_mean'] = train_data['x2'].map(train_data.groupby('x2')['target'].mean())

test_data['x2_mean'] = test_data['x2'].map(train_data.groupby('x2')['target'].mean())

test_data['x2_mean'].fillna(test_data['x2_mean'].mode()[0], inplace=True)

train_data['x3_freq'] = train_data['x3'].map(train_data['x3'].value_counts())

test_data['x3_freq'] = test_data['x3'].map(train_data['x3'].value_counts())

test_data['x3_freq'].fillna(test_data['x3_freq'].mode()[0], inplace=True)

train_data['x4_freq'] = train_data['x4'].map(train_data['x4'].value_counts())

test_data['x4_freq'] = test_data['x4'].map(train_data['x4'].value_counts())

test_data['x4_freq'].fillna(test_data['x4_freq'].mode()[0], inplace=True)

train_data['x6_freq'] = train_data['x6'].map(train_data['x6'].value_counts())

test_data['x6_freq'] = test_data['x6'].map(train_data['x6'].value_counts())

test_data['x6_freq'].fillna(test_data['x6_freq'].mode()[0], inplace=True)

train_data['x6_mean'] = train_data['x6'].map(train_data.groupby('x6')['target'].mean())

test_data['x6_mean'] = test_data['x6'].map(train_data.groupby('x6')['target'].mean())

test_data['x6_mean'].fillna(test_data['x6_mean'].mode()[0], inplace=True)

train_data['x7_freq'] = train_data['x7'].map(train_data['x7'].value_counts())

test_data['x7_freq'] = test_data['x7'].map(train_data['x7'].value_counts())

test_data['x7_freq'].fillna(test_data['x7_freq'].mode()[0], inplace=True)

train_data['x7_mean'] = train_data['x7'].map(train_data.groupby('x7')['target'].mean())

test_data['x7_mean'] = test_data['x7'].map(train_data.groupby('x7')['target'].mean())

test_data['x7_mean'].fillna(test_data['x7_mean'].mode()[0], inplace=True)

train_data['x8_freq'] = train_data['x8'].map(train_data['x8'].value_counts())

test_data['x8_freq'] = test_data['x8'].map(train_data['x8'].value_counts())

test_data['x8_freq'].fillna(test_data['x8_freq'].mode()[0], inplace=True)

train_data['x8_mean'] = train_data['x8'].map(train_data.groupby('x8')['target'].mean())

test_data['x8_mean'] = test_data['x8'].map(train_data.groupby('x8')['target'].mean())

test_data['x8_mean'].fillna(test_data['x8_mean'].mode()[0], inplace=True)实测使用众数填充会比0填充好一点

实测分数 0.76398

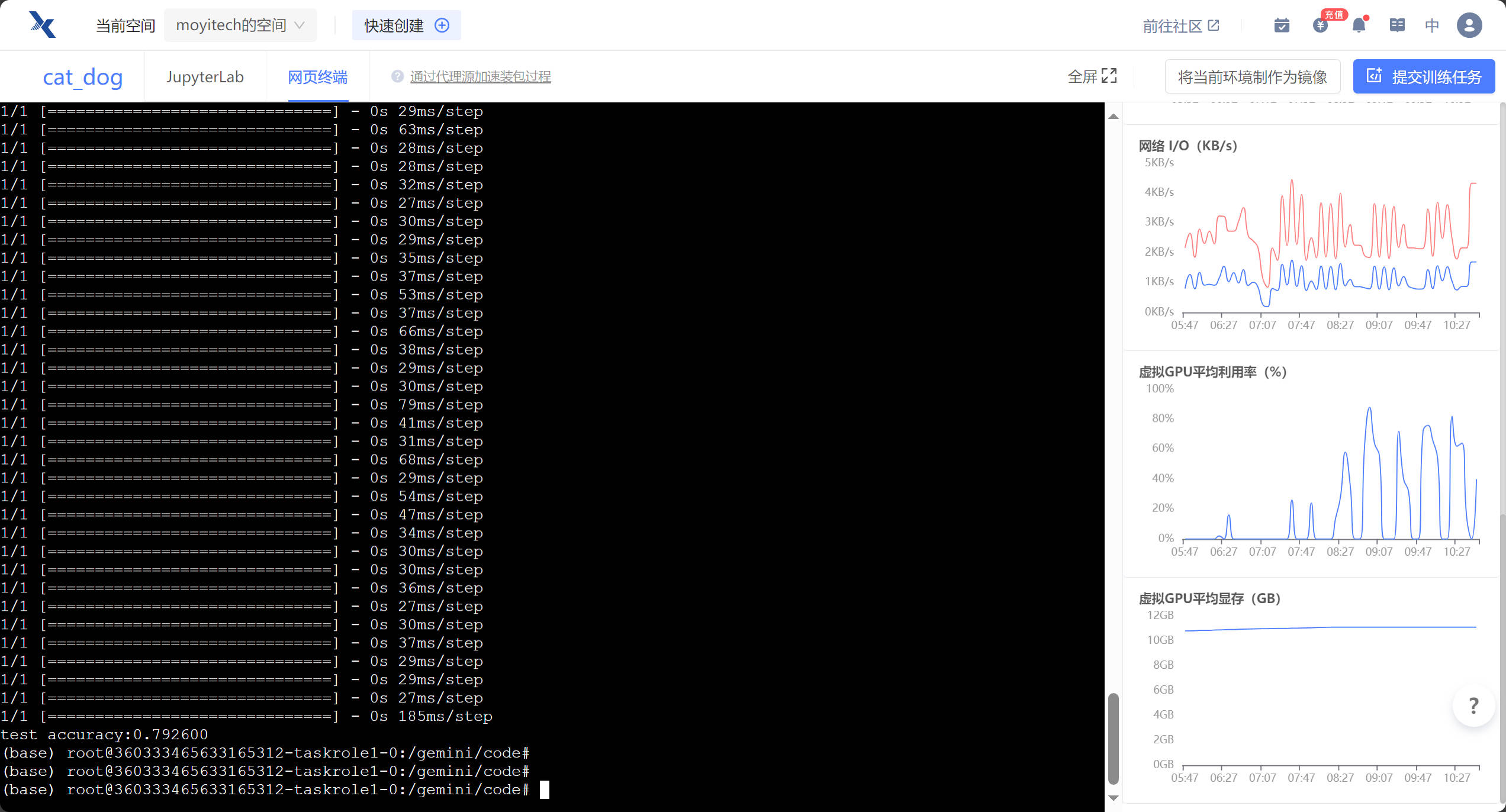

无脑大招:AutoGluon

直接上代码:

import pandas as pd

import numpy as np

train_data = pd.read_csv('用户新增预测挑战赛公开数据/train.csv')

test_data = pd.read_csv('用户新增预测挑战赛公开数据/test.csv')

#autogluon

from autogluon.tabular import TabularDataset, TabularPredictor

clf = TabularPredictor(label='target')

clf.fit(

TabularDataset(train_data.drop(['uuid'], axis=1)),

)

print("预测的正确率为:",clf.evaluate(

TabularDataset(train_data.drop(['uuid'], axis=1)),

)

)

pd.DataFrame({

'uuid': test_data['uuid'],

'target': clf.predict(test_data.drop(['uuid'], axis=1))

}).to_csv('submit.csv', index=None)AutoGluon分数:0.79868

使用x1-x8识别用户特征

参考自Ivan大佬

import pandas as pd

import numpy as np

train_data = pd.read_csv('用户新增预测挑战赛公开数据/train.csv')

test_data = pd.read_csv('用户新增预测挑战赛公开数据/test.csv')

user_df = train_data.groupby(by=['x1', 'x2', 'x3', 'x4', 'x5', 'x6', 'x7', 'x8'])['target'].mean().reset_index(

name='user_prob')

from sklearn.tree import DecisionTreeClassifier

for i in range(user_df.shape[0]):

x1 = user_df.iloc[i, 0]

x2 = user_df.iloc[i, 1]

x3 = user_df.iloc[i, 2]

x4 = user_df.iloc[i, 3]

x5 = user_df.iloc[i, 4]

x6 = user_df.iloc[i, 5]

x7 = user_df.iloc[i, 6]

x8 = user_df.iloc[i, 7]

sub_train = train_data.loc[

(train_data['x1'] == x1) & (train_data['x2'] == x2) &

(train_data['x3'] == x3) & (train_data['x4'] == x4) &

(train_data['x5'] == x5) & (train_data['x6'] == x6) &

(train_data['x7'] == x7) & (train_data['x8'] == x8)

]

sub_test = test_data.loc[

(test_data['x1'] == x1) & (test_data['x2'] == x2) &

(test_data['x3'] == x3) & (test_data['x4'] == x4) &

(test_data['x5'] == x5) & (test_data['x6'] == x6) &

(test_data['x7'] == x7) & (test_data['x8'] == x8)

]

# print(sub_train.columns)

clf = DecisionTreeClassifier()

clf.fit(

sub_train.loc[:, ['eid', 'common_ts']],

sub_train['target']

)

try:

test_data.loc[

(test_data['x1'] == x1) & (test_data['x2'] == x2) &

(test_data['x3'] == x3) & (test_data['x4'] == x4) &

(test_data['x5'] == x5) & (test_data['x6'] == x6) &

(test_data['x7'] == x7) & (test_data['x8'] == x8),

['target']

] = clf.predict(

test_data.loc[

(test_data['x1'] == x1) & (test_data['x2'] == x2) &

(test_data['x3'] == x3) & (test_data['x4'] == x4) &

(test_data['x5'] == x5) & (test_data['x6'] == x6) &

(test_data['x7'] == x7) & (test_data['x8'] == x8),

['eid', 'common_ts']]

)

except:

pass

test_data.fillna(0, inplace=True)

test_data['target'] = test_data.target.astype(int)

test_data[['uuid','target']].to_csv('submit_2.csv', index=None)实测分数:0.831

最后 修改代码,把fillna替换为如下代码

from sklearn.tree import DecisionTreeClassifier

clf = DecisionTreeClassifier()

clf.fit(

train_data.drop(['udmap', 'common_ts', 'uuid', 'target', 'common_ts_hour'], axis=1),

train_data['target']

)

test_data.loc[pd.isna(test_data['target']),'target'] = \

clf.predict(

test_data.loc[

pd.isna(test_data['target']),

test_data.drop(['udmap', 'common_ts', 'uuid', 'target', 'common_ts_hour'], axis=1).columns]

)最终分数: 0.8321

评论 (0)