Paper Overview

Title: Augmentation-Adapted Retriever Improves Generalization of Language Models as Generic Plug-In

Abstract:

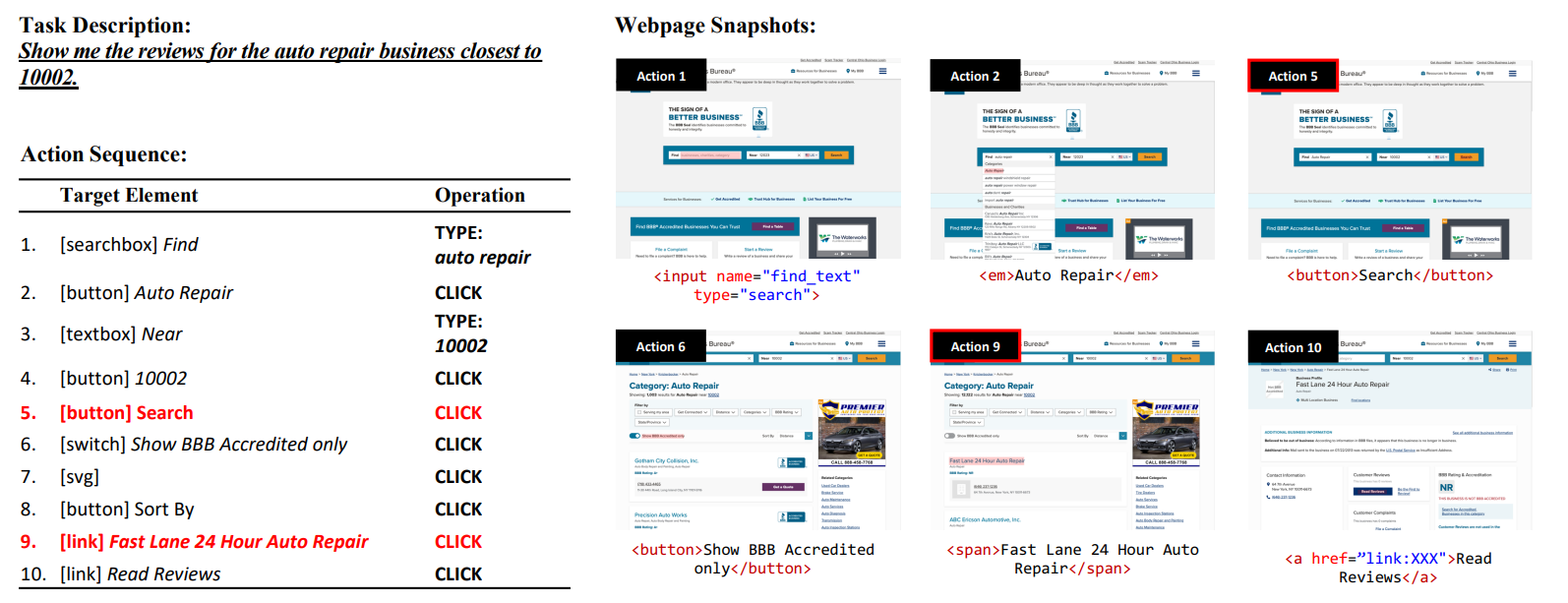

Retrieval augmentation can aid language models (LMs) in knowledge-intensive tasks by supplying them with external information. Prior works on retrieval augmentation usually jointly fine-tune the retriever and the LM, making them closely coupled. In this paper, we explore the scheme of generic retrieval plug-in: the retriever is to assist target LMs that may not be known beforehand or are unable to be fine-tuned together. To retrieve useful documents for unseen target LMs, we propose augmentation-adapted retriever (AAR), which learns LM’s preferences obtained from a known source LM. Experiments on the MMLU and PopQA datasets demonstrate that our AAR trained with a small source LM is able to significantly improve the zero-shot generalization of larger target LMs ranging from 250M Flan-T5 to 175B InstructGPT. Further analysis indicates that the preferences of different LMs overlap, enabling AAR trained with a single source LM to serve as a generic plug-in for various target LMs. Our code is open-sourced at https://github.com/OpenMatch/AugmentationAdapted-Retriever.

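The paper proposes a method called the Augmentation-Adapted Retriever (AAR) for improving the zero-shot generalization of language models. AAR targets LMs that cannot be fine-tuned jointly with the retriever, such as black-box models like GPT, and supplies them with external information to improve their performance on knowledge-intensive tasks. Unlike existing retrieval-augmentation methods, AAR learns LM preferences from a small, known model (the source LM) and uses them to serve different, larger target LMs. Experiments on the MMLU and PopQA datasets show that AAR significantly improves the zero-shot generalization of target LMs and can act as a generic plug-in for a variety of them.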

Paper Interpretation

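A RAG system today has two parts, a Retriever and an LLM, which are usually pre-trained separately: the Retriever fetches the most relevant documents or passages from an embedded vector database, and the LLM generates the answer. RAG and fine-tuning do not conflict. Fine-tuning the two together couples the Retriever and the LLM tightly and can improve the performance of the RAG system. That approach works for white-box models, but for black-box models served through an online API we usually cannot fine-tune the LLM. The paper therefore proposes AAR, which adapts the Retriever to what the LLM needs, so the black-box model itself never has to be fine-tuned.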
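The plug-in arrangement described above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names `retrieve` and `answer_with_retrieval`, the prompt template, and the toy two-dimensional embeddings are all hypothetical, and `llm` stands for any black-box text-in, text-out callable rather than a specific API.

```python
from typing import Callable, List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner-product similarity between two embedding vectors."""
    return sum(x * y for x, y in zip(a, b))


def retrieve(query_vec: Sequence[float],
             doc_vecs: Sequence[Sequence[float]],
             docs: Sequence[str],
             k: int = 2) -> List[str]:
    """Return the k documents whose embeddings score highest against the query."""
    ranked = sorted(range(len(docs)),
                    key=lambda i: dot(query_vec, doc_vecs[i]),
                    reverse=True)
    return [docs[i] for i in ranked[:k]]


def answer_with_retrieval(question: str,
                          query_vec: Sequence[float],
                          doc_vecs: Sequence[Sequence[float]],
                          docs: Sequence[str],
                          llm: Callable[[str], str],
                          k: int = 2) -> str:
    """Prepend retrieved documents to the prompt; the LLM itself is never modified."""
    context = "\n".join(retrieve(query_vec, doc_vecs, docs, k))
    # Hypothetical prompt template, chosen only for illustration.
    prompt = f"{context}\n\nQuestion: {question}\nAnswer:"
    return llm(prompt)
```

Only the Retriever side (how `doc_vecs` and `query_vec` are produced) would change under AAR. The call into `llm` stays the same, which is what lets the retriever act as a plug-in for a model that cannot be fine-tuned.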

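The text embedding models typically used as Retrievers today are trained with contrastive learning. The training data pairs human-annotated Positive Docs with automatically mined Negative Docs (as in ANCE), so the resulting Retriever reflects the preferences of human annotators rather than those of the LLM. Because the training data of different LLMs overlaps heavily, and the paper's analysis finds that their preferences overlap as well, the preferences of a small source LM (such as Flan-T5) can stand in for those of the target LLM. The Positive Docs then become the union of the human-annotated docs and the docs that the source LM scores as most preferred, while the Negative Docs are still taken from ANCE.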
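A minimal sketch of the data construction described above, under some assumptions: `lm_scores` is a hypothetical mapping from document id to a preference score already computed with the source LM (how that score is computed is not covered here), `top_k` is an illustrative cutoff, and the loss is a generic softmax contrastive loss rather than necessarily the exact objective used in the paper.

```python
import math
from typing import Dict, List, Sequence


def build_positives(human_positives: Sequence[str],
                    candidates: Sequence[str],
                    lm_scores: Dict[str, float],
                    top_k: int) -> List[str]:
    """Union of human-annotated positives and the source LM's top-k preferred docs."""
    ranked = sorted(candidates, key=lambda d: lm_scores[d], reverse=True)
    lm_preferred = ranked[:top_k]
    # dict.fromkeys removes duplicates while keeping first-seen order.
    return list(dict.fromkeys(list(human_positives) + lm_preferred))


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner-product similarity between two embedding vectors."""
    return sum(x * y for x, y in zip(a, b))


def contrastive_loss(query: Sequence[float],
                     positive: Sequence[float],
                     negatives: Sequence[Sequence[float]]) -> float:
    """Negative log-probability of the positive under a softmax over all scores."""
    pos_score = dot(query, positive)
    scores = [pos_score] + [dot(query, n) for n in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_z - pos_score
```

In this sketch the negatives would be supplied by whatever hard-negative mining is already in place (ANCE in the text above). Only the positive set changes, which is the point the paragraph makes.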

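My reading of the paper is this: the authors borrow the idea behind ANCE and apply it to the positive side, augmenting the Positive Docs used in training. This improves the retrieval ability of the text embedding model, so that it retrieves more docs that the source LM (or the LLM) prefers.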
